I have been working on a Node.js web service over the past few months and have been using the excellent Node.js style guide as my first point of reference when handling exceptions.

http://caolanmcmahon.com/posts/nodejs_style_and_structure/

Based on that style guide, and in particular point 7, "Catch Errors in Sync Calls", we have the following sample function:

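```javascript
var fs = require('fs');

function readJson(filename, callback) {
  fs.readFile(filename, function (err, content) {
    if (err) {
      return callback(err);
    }

    var data;
    try {
      // JSON.parse throws synchronously on malformed input; turn that into a
      // callback error instead of letting it escape the async callback.
      data = JSON.parse(content.toString());
    } catch (e) {
      return callback(e);
    }

    return callback(null, data);
  });
}
```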

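We need to be careful to catch any errors and convert them into errors passed back through the callback, or bad stuff starts to happen. An exception thrown inside an asynchronous callback cannot be caught by the original caller and, by default, crashes the whole process. So I have written umpteen functions this way, and it was working really well for me until I became aware of the following: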

https://github.com/joyent/node/wiki/Best-practices-and-gotchas-with-v8

To summarise, try/catch blocks can have a significant performance impact in Node under certain circumstances.

It seems that the V8 JavaScript engine has an optimising compiler called Crankshaft. Crankshaft identifies the parts of your code that are called frequently ("hot" code) and does its best to optimise them. Unfortunately, Crankshaft is unable to optimise a function that contains a try/catch block.

Now, the code above does not suffer from any performance issues. Almost all of the work inside its try block happens in JSON.parse itself, which is unaffected by the try/catch in the calling function. The problem comes about when we do some expensive synchronous work, such as a long-running loop, directly inside the function that contains the try/catch. The following code would incur a large performance overhead:

```javascript
// j_ (the loop count) is assumed to be a global set by the benchmark harness.
function readJsonWithTryCatch(filename, callback) {
  fs.readFile(filename, function (err, content) {
    if (err) {
      return callback(err);
    }

    var data = JSON.parse(content.toString());

    try {
      // Expensive synchronous work written inline inside the try block.
      var s = 0;
      for (var i = 0; i < j_; i++) s = i;
      for (var i = 0; i < j_; i++) s = i;
      for (var i = 0; i < j_; i++) s = i;
      for (var i = 0; i < j_; i++) s = i;
    } catch (e) {
      return callback(e);
    }

    return callback(null, data);
  });
}
```

I benchmarked the above function both with and without the try/catch, using Benchmark.js (the library behind jsPerf) on node 0.8.22, and got the following results:

With j_ = 10,000,000:

| Benchmark | ops/sec | ± (relative margin of error) | Runs sampled |
|---|---|---|---|
| Control (readJSONWithoutTryCatch) | 18.71 | ±13.16% | 48 |
| readJsonWithTryCatch | 10.73 | ±0.50% | 55 |

On my machine I had to make j_ quite large before the try/catch had any impact. The two functions performed essentially the same at j_ = 1,000,000 but diverged at j_ = 10,000,000. Strangely, the results for the function without the try/catch have a much larger margin of error. As a result, at j_ = 1,000,000 Benchmark.js actually reports the function with the try/catch as marginally faster.

With j_ = 1,000,000:

| Benchmark | ops/sec | ± (relative margin of error) | Runs sampled |
|---|---|---|---|
| Control (readJSONWithoutTryCatch) | 105 | ±4.58% | 49 |
| readJsonWithTryCatch | 103 | ±0.57% | 82 |

Fastest is readJsonWithTryCatch.
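Why would Benchmark.js name the function with the lower average as the fastest? As far as I can tell from the Benchmark.js source, `Suite#filter('fastest')` does not rank on the mean alone. It ranks benchmarks by mean time per operation plus its margin of error, so a noisy result is penalised. Below is a quick check using the j_ = 1,000,000 figures above. The helper name `pessimisticPeriod` is my own, and the inputs are the rounded values Benchmark.js printed.

```javascript
'use strict';

// Mimics (as I understand it) Benchmark.js's 'fastest' ordering:
// mean seconds per operation plus the margin of error, lower is better.
function pessimisticPeriod(opsPerSec, rmePercent) {
  var mean = 1 / opsPerSec;            // seconds per operation
  var moe = mean * (rmePercent / 100); // rme is the margin of error as a % of the mean
  return mean + moe;
}

var control = pessimisticPeriod(105, 4.58);      // readJSONWithoutTryCatch
var withTryCatch = pessimisticPeriod(103, 0.57); // readJsonWithTryCatch

console.log((control * 1000).toFixed(3) + ' ms');      // 9.960 ms
console.log((withTryCatch * 1000).toFixed(3) + ' ms'); // 9.764 ms
console.log(withTryCatch < control);                   // true
```

With these figures, the quieter try/catch run comes out ahead even though its mean rate is slightly lower.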

So the first thing I have to wonder is whether this even matters much, or whether worrying about it is really just premature optimisation.
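Whether it matters also depends on your Node version. If you want to check your own setup without installing Benchmark.js, here is a much cruder sketch that times the same loop with and without a surrounding try/catch using `process.hrtime`. The names `loopInsideTry`, `loopWithoutTry`, `time` and `LOOP_COUNT` are hypothetical stand-ins for this illustration, not code from my gist.

```javascript
'use strict';

// A crude timing sketch, NOT Benchmark.js: no warm-up phase and no statistics.
// LOOP_COUNT is a hypothetical stand-in for j_; pick a value that suits your machine.
var LOOP_COUNT = 1e7;

// The loop written directly inside a try block, as in readJsonWithTryCatch.
function loopInsideTry(n) {
  var s = 0;
  try {
    for (var i = 0; i < n; i++) s = i;
  } catch (e) {
    return -1;
  }
  return s;
}

// The same loop with no try/catch anywhere in the function.
function loopWithoutTry(n) {
  var s = 0;
  for (var i = 0; i < n; i++) s = i;
  return s;
}

// Calls fn(n) `runs` times and returns the mean wall-clock milliseconds per call.
function time(fn, n, runs) {
  var start = process.hrtime();
  for (var r = 0; r < runs; r++) fn(n);
  var diff = process.hrtime(start); // [seconds, nanoseconds] elapsed since start
  return (diff[0] * 1e3 + diff[1] / 1e6) / runs;
}

console.log('loopInsideTry:  ' + time(loopInsideTry, LOOP_COUNT, 10).toFixed(2) + ' ms/call');
console.log('loopWithoutTry: ' + time(loopWithoutTry, LOOP_COUNT, 10).toFixed(2) + ' ms/call');
```

From Node 8.3 onwards, V8's TurboFan compiler replaced Crankshaft and can optimise functions containing try/catch. On a modern Node I would therefore expect these two numbers to be close. The gap described in this post belongs to the Crankshaft era.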

Next, I tried placing the expensive computation in an external function, which allows V8 to optimise it, and tested the following code against our control:

```javascript
// Expensive synchronous work moved into its own, long-lived function.
// j_ (the loop count) is assumed to be a global set by the benchmark harness.
function intensive() {
  var s = 0;
  for (var i = 0; i < j_; i++) s = i;
  for (var i = 0; i < j_; i++) s = i;
  for (var i = 0; i < j_; i++) s = i;
  for (var i = 0; i < j_; i++) s = i;
}

function readJsonWithTryCatchIntensive(filename, callback) {
  fs.readFile(filename, function (err, content) {
    if (err) {
      return callback(err);
    }

    var data;
    try {
      data = JSON.parse(content.toString());
      intensive();
    } catch (e) {
      return callback(e);
    }

    return callback(null, data);
  });
}
```

The results were:

| Benchmark | ops/sec | ± (relative margin of error) | Runs sampled |
|---|---|---|---|
| Control (readJSONWithoutTryCatch) | 18.75 | ±13.12% | 48 |
| readJsonWithTryCatchIntensive | 27.54 | ±0.46% | 68 |

Fastest is readJsonWithTryCatchIntensive.

Hang on! I wasn't expecting to outperform the control.

After much head scratching, I have come to a conclusion about why the control isn't performing well. The callback passed to readFile is declared afresh each and every time the control function is called. V8 seems to have trouble optimising it because the function doesn't hang around long enough to become hot: as far as V8 is concerned, it is a new function every time it is declared. So we really need a new control case, which should be as follows instead:

```javascript
function intensive() {
  var s = 0;
  for (var i = 0; i < j_; i++) s = i;
  for (var i = 0; i < j_; i++) s = i;
  for (var i = 0; i < j_; i++) s = i;
  for (var i = 0; i < j_; i++) s = i;
}

function readJsonWithoutTryCatchIntensive(filename, callback) {
  fs.readFile(filename, function (err, content) {
    if (err) {
      return callback(err);
    }

    var data = JSON.parse(content.toString());
    intensive();

    return callback(null, data);
  });
}
```

And now, finally, we get the same results for both:

| Benchmark | ops/sec | ± (relative margin of error) | Runs sampled |
|---|---|---|---|
| Control (readJSONWithoutTryCatchIntensive) | 27.49 | ±0.38% | 68 |
| readJsonWithTryCatchIntensive | 27.44 | ±0.44% | 68 |

Fastest is Control (readJSONWithoutTryCatchIntensive), readJsonWithTryCatchIntensive (Benchmark.js reports both).
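These results suggest that what matters is keeping the heavy lifting out of the function that contains the try/catch. A commonly used pattern turns this around: keep the try/catch itself in a tiny helper, so that only the helper misses out on optimisation, while the functions doing real work contain no try/catch at all. Below is a minimal sketch. `tryCall` and `parseJsonOrDefault` are hypothetical names for illustration, not a library API.

```javascript
'use strict';

// Hypothetical helper: the ONLY function here containing a try/catch.
// Under Crankshaft this small function would stay unoptimised, but its
// callers would not be affected.
function tryCall(fn, arg) {
  try {
    return { error: null, value: fn(arg) };
  } catch (e) {
    return { error: e, value: undefined };
  }
}

// A caller with no try/catch of its own; it inspects the result object instead.
function parseJsonOrDefault(text, fallback) {
  var result = tryCall(JSON.parse, text);
  return result.error ? fallback : result.value;
}

console.log(parseJsonOrDefault('{"a": 1}', null)); // prints { a: 1 }
console.log(parseJsonOrDefault('not json', null)); // prints null
```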

As a final comparison, let's take our worst performer and compare it with the best at a lower value of j_. We know that at j_ = 10,000,000 our worst performer (readJsonWithTryCatch) manages only 10.73 ops/sec. That is roughly 2.5 times slower than putting the work in an external function (27.54 ops/sec). What about at j_ = 1,000,000?

| Benchmark | ops/sec | ± (relative margin of error) | Runs sampled |
|---|---|---|---|
| Control (readJSONWithoutTryCatchIntensive) | 256 | ±0.75% | 87 |
| readJsonWithTryCatchIntensive | 256 | ±0.76% | 87 |
| readJsonWithTryCatch | 105 | ±4.56% | 49 |

Fastest is Control (readJSONWithoutTryCatchIntensive), readJsonWithTryCatchIntensive (Benchmark.js reports both).
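Dividing the ops/sec figures reported above puts a number on "worst versus best" at each value of j_. The `runs` object simply copies the measured results from this post. The `external` figures are readJsonWithTryCatchIntensive and the `inline` figures are readJsonWithTryCatch.

```javascript
'use strict';

// ops/sec figures copied from the benchmark runs reported above.
var runs = {
  '10,000,000': { external: 27.54, inline: 10.73 },
  '1,000,000': { external: 256, inline: 105 }
};

Object.keys(runs).forEach(function (j) {
  var r = runs[j];
  // How many times faster the external-function version is than the inline one.
  var ratio = r.external / r.inline;
  console.log('j_ = ' + j + ': ' + ratio.toFixed(2) + 'x');
});
// prints:
// j_ = 10,000,000: 2.57x
// j_ = 1,000,000: 2.44x
```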

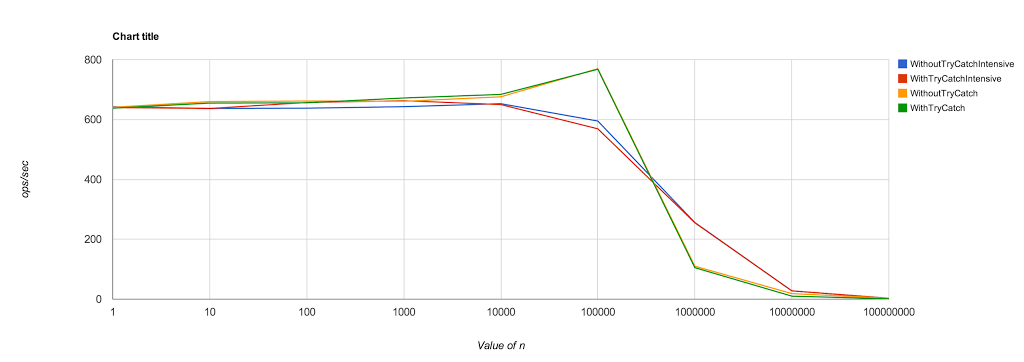

Let's have a look at a chart of performance at various values of n.

The source code used to drive the results above is in this gist.

I have no idea why performance improves for a couple of our functions at larger values of n and then falls off a cliff (I repeated these tests over and over and got similar results each time). Ignoring that phenomenon for a moment, since it's a relatively small effect, the functions that call out to the external intensive function seem to perform significantly better when there is a lot of work to do. When there is less work involved, it doesn't really matter. This testing also shows that you need to be careful about doing significant amounts of work in anonymous functions, or in other functions that are created and discarded regularly.
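To make that last point concrete, the sketch below compares two kinds of function. A function expression evaluated inside another function produces a brand-new function object on every call. A module-level function like intensive() is a single, long-lived object. `sumTo` and `makeHandler` are hypothetical names. The snippet only demonstrates object identity; how V8 actually decides what to optimise is the hypothesis discussed earlier, not something this proves.

```javascript
'use strict';

// Heavy work lives in one long-lived, module-level function (like intensive()).
function sumTo(n) {
  var s = 0;
  for (var i = 0; i < n; i++) s += i;
  return s;
}

// Returns a fresh closure on every call, just as the anonymous readFile
// callback in the original control is recreated each time the control runs.
function makeHandler(n) {
  return function handler() {
    return sumTo(n); // the closure itself stays tiny and delegates the work
  };
}

var first = makeHandler(10);
var second = makeHandler(10);

console.log(first === second); // false: two distinct function objects
console.log(first());          // 45 (0 + 1 + ... + 9), computed by the shared sumTo
```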

My guess is that in most cases it's not going to make a huge difference to the performance of your code, but I am still inclined to search for a solution that has less boilerplate code and doesn't have the performance gotchas. In my next post I will have a look at how Node.js domains might help make the exception handling simpler and whether there are similar performance issues.