In my last post I did some performance testing to show how much of an impact try catch blocks have on performance.

In this post I want to have a look at Node.js domains to see if they:

- Introduce a performance hit

- Provide a more straightforward way of mixing synchronous and asynchronous exception handling

So, we know that when we write an async function, any synchronous errors need to be caught and passed to the callback as its first argument. For example:

    function readJsonWithTryCatch(filename, callback) {
      fs.readFile(filename, function (err, content) {
        if (err) {
          return callback(err);
        }
        // Note: JSON.parse sits outside the try block, so a parse error is not passed to the callback.
        var data = JSON.parse(content.toString());
        try {
          // Busy work standing in for real processing; j_ is defined outside this snippet.
          var s = 0;
          for (var i = 0; i < j_; i++) s = i;
          for (var i = 0; i < j_; i++) s = i;
          for (var i = 0; i < j_; i++) s = i;
          for (var i = 0; i < j_; i++) s = i;
        } catch (e) {
          return callback(e);
        }
        return callback(null, data);
      });
    }

In the previous post we showed that to get the best performance from this function we need to take the code between the try and catch and put it in an external function. We end up with quite a bit of exception handling boilerplate code with both the if(err) { return callback(err);} and the try catch blocks. If we needed to do some work prior to the async call to readFile we would have another try catch block for what is a rather simple function.
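As a rough sketch of the external-function refactor described above: the loop moves into its own function and the try catch block only wraps the calls. The names ITERATIONS and readJsonWithTryCatchExternal are made up for illustration and are not taken from the test code. Unlike the listing above, this sketch also puts JSON.parse inside the try.

```javascript
var fs = require('fs');

// Hypothetical iteration count, standing in for the j_ used in the post.
var ITERATIONS = 1000;

// The hot loop lives in its own function, which contains no try/catch.
function intensive() {
  var s = 0;
  for (var i = 0; i < ITERATIONS; i++) s = i;
  return s;
}

// Hypothetical name: same shape as readJsonWithTryCatch, with the work extracted.
function readJsonWithTryCatchExternal(filename, callback) {
  fs.readFile(filename, function (err, content) {
    if (err) {
      return callback(err);
    }
    var data;
    try {
      // Assumption for this sketch: parsing is also guarded by the try.
      data = JSON.parse(content.toString());
      intensive();
    } catch (e) {
      return callback(e);
    }
    return callback(null, data);
  });
}
```

Even in this form there are still two separate error paths to remember: the if (err) check and the try catch block.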

It's easy to forget one of these steps and end up with a function that doesn't work very well or crashes the node process. Can domains save us? First thing to work out is whether they incur a performance hit like try catch blocks do. So, testing the same functions as in the previous blog post but calling them using domain.run we get the following results: (GIST containing test code)

    Control (readJSONWithoutTryCatchIntensive) x 27.21 ops/sec ±0.49% (67 runs sampled)
    readJSONWithoutTryCatchIntensive-Domain x 27.20 ops/sec ±0.45% (67 runs sampled)
    readJSONWithTryCatchIntensive-Domain x 27.04 ops/sec ±0.41% (67 runs sampled)
    readJSONWithTryCatch-Domain x 10.15 ops/sec ±0.50% (52 runs sampled)
    readJSONWithoutTryCatch-Domain x 18.04 ops/sec ±13.60% (47 runs sampled)
    Fastest is readJSONWithoutTryCatchIntensive-Domain, Control (readJSONWithoutTryCatchIntensive), readJSONWithTryCatchIntensive-Domain
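For anyone who hasn't used domain.run before, calling an existing function through a domain might look something like the sketch below. This is not the code from the gist; runInDomain and failingTask are hypothetical names used only to show the pattern.

```javascript
var domain = require('domain');

// Hypothetical helper: runs fn(callback) inside a fresh domain and reports
// anything thrown by fn, or by async work it starts, through callback(err).
function runInDomain(fn, callback) {
  var d = domain.create();
  d.on('error', function (err) {
    callback(err);
  });
  d.run(function () {
    fn(callback);
  });
}

// Hypothetical task that throws from a timer, where a try/catch around the
// original call could not catch it.
function failingTask(callback) {
  setTimeout(function () {
    throw new Error('boom');
  }, 0);
}

runInDomain(failingTask, function (err) {
  console.log('caught via domain:', err.message);
});
```

The function being called does not need to know about the domain at all, which is why the existing test functions could be reused unchanged.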

That's promising: the domain hasn't added any measurable performance overhead to our previous scenarios (see the tests in the previous post for comparison). So, what if we rewrite the functions to use domains internally? Will that impact performance? It could certainly remove a great deal of boilerplate code, as our readJsonWithTryCatch function above could become:

    function readJsonWithInternalDomain(filename, callback) {
      var d = domain.create().on('error', function (err) { return callback(err); });
      fs.readFile(filename, d.intercept(function (content) {
        var data = JSON.parse(content.toString());
        var s = 0;
        for (var i = 0; i < j_; i++) s = i;
        for (var i = 0; i < j_; i++) s = i;
        for (var i = 0; i < j_; i++) s = i;
        for (var i = 0; i < j_; i++) s = i;
        return callback(null, data);
      }));
    }

A much cleaner syntax in my opinion and far easier to spot if it has not been implemented correctly. However, does it incur a performance hit?
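In case d.intercept is unfamiliar, here is a small sketch of what it does with the err argument. The seen array and the sample messages are made up for the demonstration; only domain.create, on('error') and intercept come from the domain module.

```javascript
var domain = require('domain');

var d = domain.create();
var seen = []; // hypothetical log of what happened, for demonstration only

d.on('error', function (err) {
  seen.push('error: ' + err.message);
});

// intercept() returns a wrapper that checks its first argument for an Error.
var wrapped = d.intercept(function (content) {
  // The err argument has been stripped, so content is the first parameter.
  seen.push('content: ' + content);
});

wrapped(null, 'hello');            // no error: the inner function receives 'hello'
wrapped(new Error('read failed')); // error: inner function is skipped, domain emits 'error'

console.log(seen);
```

This is why the intercepted callback in readJsonWithInternalDomain takes only content and has no if (err) check.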

So, I tested the new internal domain function and a version with the intensive work in an external function. The performance results are: (GIST)

    Control (readJSONWithoutTryCatchIntensive) x 27.09 ops/sec ±0.43% (67 runs sampled)
    readJsonWithInternalDomainIntensive x 27.04 ops/sec ±0.46% (67 runs sampled)
    readJSONWithInternalDomain x 18.40 ops/sec ±13.06% (47 runs sampled)
    readJsonWithTryCatch x 10.16 ops/sec ±0.49% (52 runs sampled)
    Fastest is Control (readJSONWithoutTryCatchIntensive), readJsonWithInternalDomainIntensive

That's great news: the internal domain versions perform similarly to their non try catch counterparts, and neither of them performs as poorly as the original try catch version. And since readJSONWithInternalDomain and readJsonWithTryCatch are equivalent functions, we could say that using domains actually outperforms the try catch equivalent in certain circumstances. When you combine that with the simpler syntax, it seems a bit of a no-brainer to me. My only concern is additional memory consumption, so let's do a quick test for that.

To test I used the fantastic performance monitoring service at nodetime.com.

So, I refactored each of the functions to recursively call themselves to a depth of 500 calls and then start again:

    function readJsonWithInternalDomainIntensiveRecursive(filename, callback) {
      var d = domain.create().on('error', function (err) { return callback(err); });
      fs.readFile(filename, d.intercept(function (content) {
        var data = JSON.parse(content.toString());
        intensive();
        currentDepth++;
        if (currentDepth < maxDepth) {
          readJsonWithInternalDomainIntensiveRecursive(filename, function () { return callback(); });
        } else {
          return callback(null, data);
        }
      }));
    }

    function readAgain() {
      currentDepth = 0;
      readJsonWithInternalDomainIntensiveRecursive("./test.json", readAgain);
    }

    readAgain();

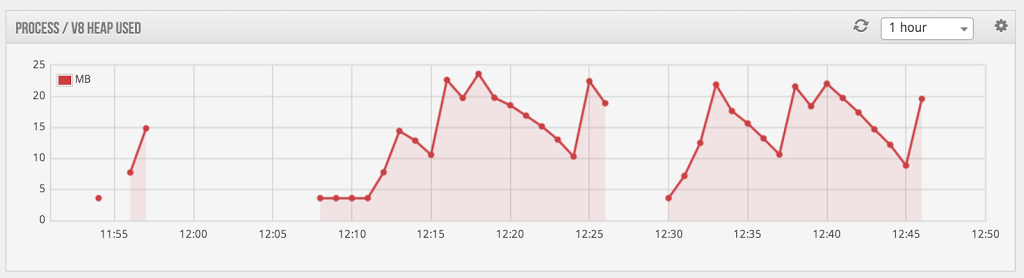

I ran the code above for 15 minutes and then tried it again with a try catch version of the function. The chart with the results is below:

Ignore the blips around 11:55 and look at the two 15-minute blocks. The first, from around 12:10, is the version using domains, and the second, from around 12:30, is the version using try catch blocks. You could argue that the domain version uses slightly more memory than the try catch version at its peak, but it certainly isn't significant. And you have to consider that there are 500 domains on the heap at once in this scenario.
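If you want a rough local check without a hosted monitoring service, something like the sketch below could sample heap usage while the recursive test runs. This is not how the chart above was produced. heapUsedMb and startHeapSampler are hypothetical helpers built on process.memoryUsage(), and the numbers will not be directly comparable to the chart.

```javascript
// Returns current heap usage in megabytes, rounded to two decimals.
function heapUsedMb() {
  return Math.round(process.memoryUsage().heapUsed / 1024 / 1024 * 100) / 100;
}

// Hypothetical helper: samples heap usage every intervalMs milliseconds and
// returns the highest value seen when stop() is called.
function startHeapSampler(intervalMs) {
  var peak = heapUsedMb();
  var timer = setInterval(function () {
    peak = Math.max(peak, heapUsedMb());
  }, intervalMs);
  timer.unref(); // do not keep the process alive just for sampling
  return {
    stop: function () {
      clearInterval(timer);
      return Math.max(peak, heapUsedMb());
    }
  };
}

var sampler = startHeapSampler(1000);
// Start the workload here (for example readAgain()), then later call:
// console.log('peak heap (MB):', sampler.stop());
```

Running it once against the domain version and once against the try catch version would give two peak figures to compare.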

For me, this has removed any doubts I had about using domains as a replacement for try catch error handling. I will be madly implementing this style in all my projects and sadly might end up refactoring my current project with this style.